In Part 1, we discussed why Project Upstream as currently set up is problematic, even though the goals of Upstream are absolutely worth pursuing. In this part, I offer solutions. Had I been involved with Upstream earlier on, this would have been my advice, but then, nobody asked me.

🙂

Basically, my take is that Upstream should become part of the MLS itself, at once transforming the fundamental relationship between the MLS and the brokerage into what it should be, while delivering all of the benefits (and then some) of data management in an increasingly complex world. This is not science fiction or fantasy; the technology to make this happen exists today, and it can be implemented in a matter of weeks and months rather than years and years, without any of the coordination that makes antitrust regulators verrrrry interested.

Let’s get into it.

Brief Recap of Part 1

I support the goals of Project Upstream:

- Improved brokerage efficiency via single point of entry for all listings data

- Improved data management by avoiding replication of data and multiple database formats

- Broker control over distribution of listings and data

Every single one of these is a necessary efficiency improvement in the real estate world. I’m all for those goals.

The problem arises because Upstream, as currently conceived, is a company (UpstreamRE, LLC) owned by a number of brokers and franchise companies, which will work with NAR to create a single national database. As WAV Group’s release said, “Firms participating in Upstream will individually manage their data in a common format and in a common database.”

That single national database, operated and managed by an entity in partnership with NAR, cannot help but invite all manner of government inquiry. It has been made clear over and over again that Upstream is not the MLS and does not seek to replace the MLS. Furthermore, one of the biggest value propositions of Upstream is control over the flow of listing information to consumers. That makes Upstream extremely vulnerable to being classified as a “public utility,” which all but guarantees in-depth regulation by state and/or federal regulators.

There is a better way.

The MLS Can and Should Deliver Upstream Benefits

The first place we begin is to note that most of the data management gains that Upstream brokers want can and should be part and parcel of the MLS itself.

Remember that I was deeply involved with bringing RPR’s AMP to reality. Working with Tim Dain, a visionary MLS leader who is now with Austin’s ACTRIS MLS, we put together a vision for the MLS that I laid out in an earlier post. A huge part of that vision is improving efficiency for brokerages by enabling the “front end of choice,” but I specifically mentioned Upstream and how it can and should be built into the MLS itself:

A way to think about [the modular MLS] is to consider Project Upstream in a Modular MLS world. The stated goals of Project Upstream are for brokerages (particularly the larger ones who belong to The Realty Alliance and LeadingRE) to have more control over the flow of data to the MLS and to the portals. (There’s some politics there too, but no technology will resolve those….) Well, with the Modular MLS, there’s no need for brokerages large or small to spend any time and treasure on building something “upstream” of the MLS. The MLS is happy to be both upstream of and downstream from the brokerage (or anyone else, for that matter). It’s like building Upstream right into the MLS “system” itself, from the very start, right at the core of what constitutes an MLS in the first place.

If you will, rather than brokerages being “downstream” of the MLS as it is today, or “upstream” of the MLS as they want for tomorrow, I’m suggesting that brokerages be “midstream” of the MLS because the MLS itself changes fundamentally.

How?

Customer-centric vs. Vendor-centric Data

The core principle is to implement what one might call “customer-centric” or “owner-centric” data platforms, as opposed to “vendor-centric” platforms as we have today. What the hell does that mean? Let me explain.

Today, almost all technology systems are “vendor-centric” in terms of how data is handled and managed. Think about something as fundamental as email. Today, you go to Google, open a Gmail account, and then upload your contacts into Google’s software. If you want to use a CRM system, you have to upload your client list, notes, and data into the vendor’s software system. Same with listings. Today, you have to log in to the MLS, and then Add a listing into the MLS’s database. If you need to make changes to the listing, you go Edit that listing inside the MLS’s database. You might own the data, but until it’s inside a vendor’s database, there isn’t anything you can actually do with it.

This is how virtually every software system in the world works today.

I said “virtually” because there are some key examples of software becoming separate from the vendor. With the Internet and the advent of advanced APIs (application programming interfaces) that move data around, we have seen things like Google Maps, whose data is not housed anywhere except on Google’s servers. Software vendors and websites have access to Google Maps data, but the data is never put into their databases. Facebook’s ecosystem also lets software access my Facebook data with my direct permission, but I never go to those software vendors and put my data into their databases.

The vision, then, is to move away from vendor-centric platforms to a customer-centric platform where the data is always owned and maintained by the customer. Vendors have access to that data, with the permission of the data owner, so that he can do stuff with his data using the vendor’s software, but there is no more “uploading” of data to any vendor’s database.
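To make the vendor-centric vs. customer-centric distinction concrete, here is a minimal Python sketch, assuming a toy in-memory store. The class `OwnerStore`, the vendor ID `crm-vendor`, and the record key are all hypothetical; a real system would sit behind an authenticated API rather than a Python object. It contrasts an uploaded copy, which goes stale, with a permissioned read of the owner’s own record.

```python
from dataclasses import dataclass, field


@dataclass
class OwnerStore:
    """Hypothetical owner-held data store: the only authoritative copy lives here."""
    records: dict[str, str] = field(default_factory=dict)
    grants: set[str] = field(default_factory=set)  # vendor IDs allowed to read

    def grant(self, vendor_id: str) -> None:
        self.grants.add(vendor_id)

    def revoke(self, vendor_id: str) -> None:
        self.grants.discard(vendor_id)

    def read(self, vendor_id: str, key: str) -> str:
        # Vendors read through the owner's store; nothing is uploaded to them.
        if vendor_id not in self.grants:
            raise PermissionError(f"{vendor_id} has no access")
        return self.records[key]


owner = OwnerStore(records={"client:42": "555-0100"})

# Vendor-centric: "uploading" means the vendor keeps its own copy.
vendor_copy = dict(owner.records)
owner.records["client:42"] = "555-0199"  # the owner updates the data
print(vendor_copy["client:42"])  # 555-0100 (stale copy)

# Owner-centric: the vendor reads the owner's record, with permission.
owner.grant("crm-vendor")
print(owner.read("crm-vendor", "client:42"))  # 555-0199
```

Revoking is a single call on the owner’s side (`owner.revoke("crm-vendor")`), after which the vendor’s reads fail rather than silently serving an old copy.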

Upstream, Through The Modular MLS

The concept, then, is to achieve Project Upstream’s many worthwhile goals by working through the MLS itself. That requires the MLS to become fully modular, and it starts with the database.

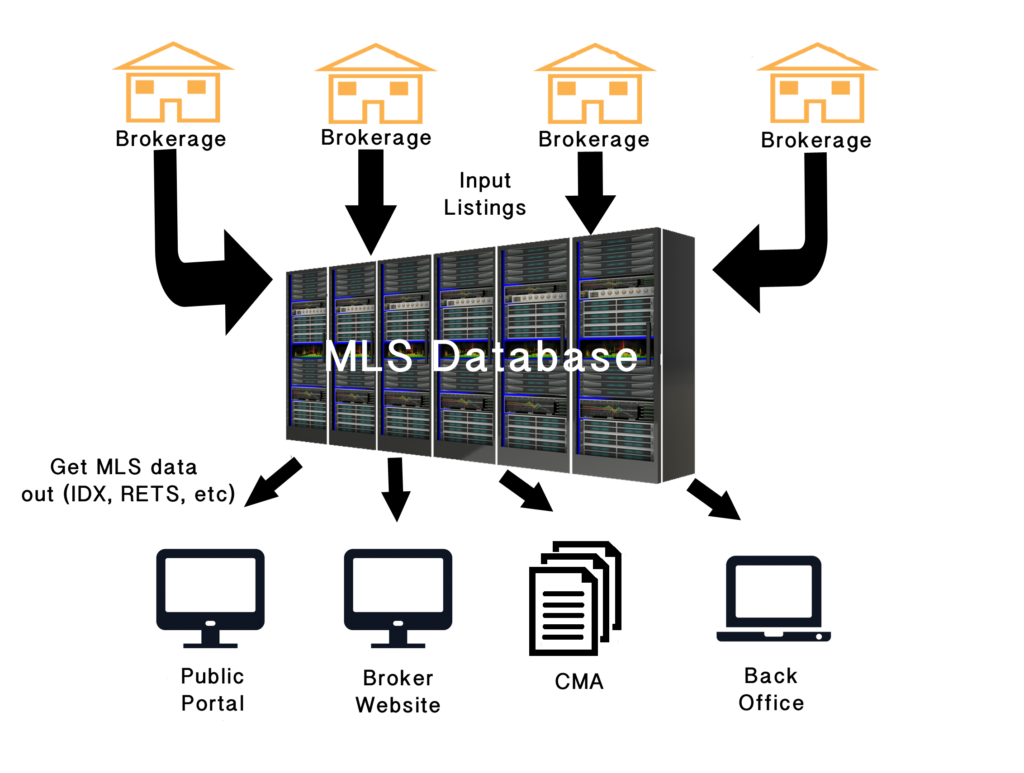

Forgive the terrible graphics here — I’m no graphic designer, that’s clear. But this is basically how the MLS of today works.

Today, there is such a thing as an MLS database. Brokers and agents input listings into this database through some sort of Add/Edit interface, and then get data out via means like RETS and IDX and VOW. Sometimes, the MLS itself hosts that database within a vendor’s platform, but on its own servers. Oftentimes, the MLS simply leaves the hosting up to the vendor (CoreLogic, FBS, etc.).

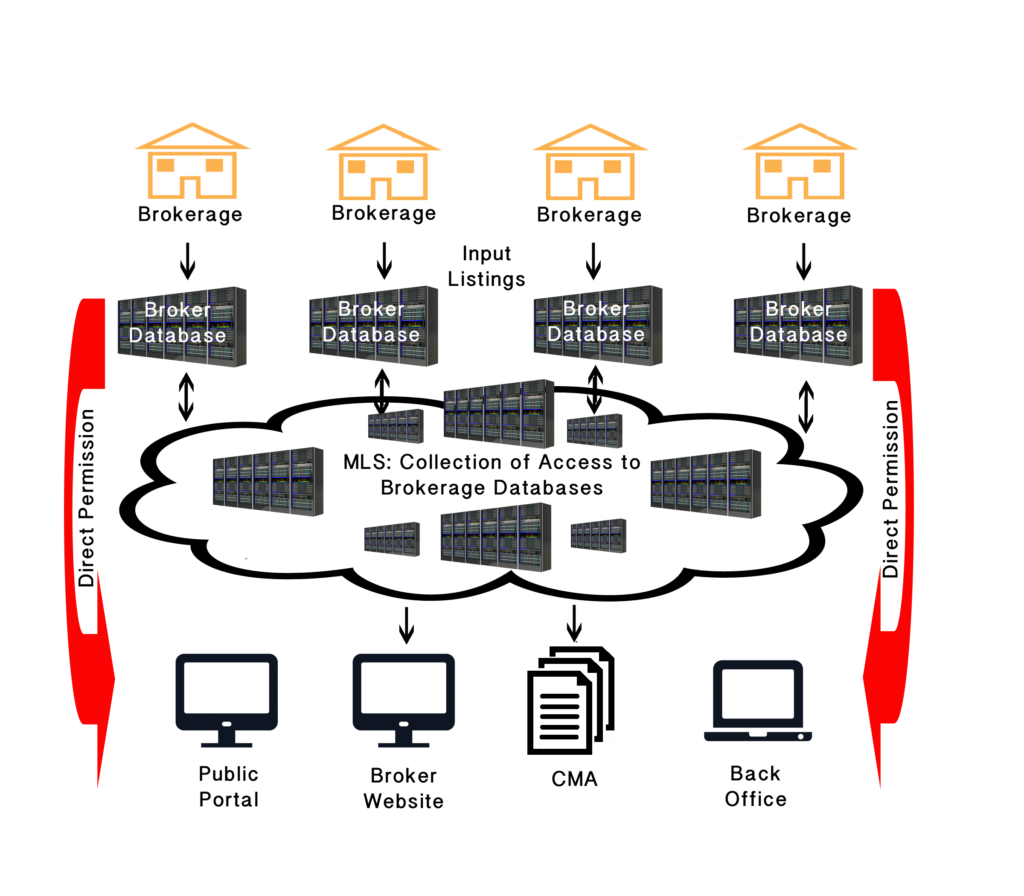

Under my proposal, that changes. The MLS no longer has a database; instead, it has a “meta-database” of pointers and permissions to a hundred, a thousand, or ten thousand brokerage databases.

Each brokerage has its own database of listings. Those listings never leave the broker’s database without the broker’s explicit permission. Instead, vendors — including the MLS — get access to the brokerage’s database to allow the broker to do what he wants done.

What I’m proposing is this:

Again, I apologize for the graphics, but a friend (also no graphic designer) and I tried our best. Let me see if I can explain the main points.

No More MLS Database

The cloud above is supposed to represent the future: the MLS “database” disappears, and is replaced by a “meta-database” of pointers to brokerage databases and permissions from those brokerages to the MLS to access the data. So if there are 300 brokerages in the MLS, the MLS “database” contains permissions from and data access pointers to those 300 brokerage databases.

Those broker databases may be hosted by the MLS itself within its hosting platform, within the Cloud, or wherever the MLS stores data. Or, they may be hosted by the brokerage itself on its own servers, or in the Cloud, or wherever. For those brokerages who belong to national franchise companies, those franchisors might offer hosting for their franchisees as well. The simple point is that it does not matter where the Broker Database is hosted, as long as all of the databases utilize the same data management platform.
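The “same platform, any host” point can be sketched with a shared interface. This is a minimal Python illustration, assuming a hypothetical `BrokerDatabase` protocol with a single `get_listing` method; neither the protocol nor the two example classes come from any real MLS product. Software written against the interface does not care whether the data sits with the MLS or with the brokerage.

```python
from typing import Protocol


class BrokerDatabase(Protocol):
    """Hypothetical common interface every Broker Database must expose."""
    def get_listing(self, listing_id: str) -> dict: ...


class MlsHostedDB:
    """Hosted by the MLS; stores listings in a dict keyed by ID."""
    def __init__(self, listings: dict[str, dict]):
        self._listings = listings

    def get_listing(self, listing_id: str) -> dict:
        return self._listings[listing_id]


class SelfHostedDB:
    """Hosted by the brokerage; stores rows as (id, record) pairs."""
    def __init__(self, rows: list[tuple[str, dict]]):
        self._rows = rows

    def get_listing(self, listing_id: str) -> dict:
        for row_id, record in self._rows:
            if row_id == listing_id:
                return record
        raise KeyError(listing_id)


def describe(db: BrokerDatabase, listing_id: str) -> str:
    # Client software depends only on the shared interface, not on hosting.
    listing = db.get_listing(listing_id)
    return f"{listing['address']} ({listing['status']})"


a = MlsHostedDB({"L1": {"address": "123 Main St.", "status": "Active"}})
b = SelfHostedDB([("L9", {"address": "9 Oak Ave.", "status": "Pending"})])
print(describe(a, "L1"))  # 123 Main St. (Active)
print(describe(b, "L9"))  # 9 Oak Ave. (Pending)
```

The design choice here is structural typing (`typing.Protocol`): any host’s implementation qualifies as long as it exposes the agreed methods, which is roughly the role a standardized API would play in this proposal.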

Part of that “platform” is a set of robust APIs (which should be RESO-compliant RETS APIs) that tell software where to find data. This technology exists today; it is not science fiction I’m writing here.

The MLS becomes a sort of “address book” for telling software, “If you need information on 123 Main St. from Brokerage XYZ, then go *here* to get the information.” Of course, for the software to get that information, it has to have permission from Brokerage XYZ. That permission is easily granted and revoked by Brokerage XYZ with this API structure. (Think of it as working much like the way Facebook and Twitter let you grant access to an app or a game, and then revoke that permission later.)
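Here is a rough sketch of the “address book” idea, assuming an in-memory meta-database that stores only endpoints and permissions, never listing data. The class name, the `https://xyz.example.com/api` endpoint, and the `tour-app` ID are hypothetical placeholders, not a RESO or RETS interface.

```python
class MetaDatabase:
    """Hypothetical MLS "address book": pointers plus permissions, no listings."""

    def __init__(self) -> None:
        self.endpoints: dict[str, str] = {}         # brokerage -> base URL
        self.permissions: dict[str, set[str]] = {}  # brokerage -> allowed apps

    def register(self, brokerage: str, endpoint: str) -> None:
        self.endpoints[brokerage] = endpoint
        self.permissions.setdefault(brokerage, set())

    # In this model, only the brokerage itself would call grant/revoke.
    def grant(self, brokerage: str, app: str) -> None:
        self.permissions[brokerage].add(app)

    def revoke(self, brokerage: str, app: str) -> None:
        self.permissions[brokerage].discard(app)

    def resolve(self, brokerage: str, listing_id: str, app: str) -> str:
        """Tell `app` where to fetch a listing, if the brokerage allows it."""
        if app not in self.permissions.get(brokerage, set()):
            raise PermissionError(f"{brokerage} has not granted access to {app}")
        return f"{self.endpoints[brokerage]}/listings/{listing_id}"


mls = MetaDatabase()
mls.register("XYZ", "https://xyz.example.com/api")
mls.grant("XYZ", "tour-app")
print(mls.resolve("XYZ", "123-main-st", "tour-app"))
# https://xyz.example.com/api/listings/123-main-st
mls.revoke("XYZ", "tour-app")  # later resolve() calls now raise PermissionError
```

Note that the MLS object never holds a listing; it only answers “where” and “may you,” which is the whole point of the meta-database.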

In situations where the software needs data from the entire MLS (for example, IDX, syndication, or market statistics reporting software), the MLS acts as a “bridge” that grants permission on behalf of all of its brokerages, pursuant to whatever rules and policies the MLS (with the consent of those brokerages) has promulgated. That’s the kind of thing that the MLS already knows how to do and does very well.
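The “bridge” role can be sketched as the MLS assembling an MLS-wide view on demand, including only brokerages whose consent covers the requested use. The brokerage names, the `consents` table, and the use labels (`idx`, `stats`) are invented for illustration; actual rules would come from each MLS’s own policies.

```python
from typing import Optional

# Hypothetical data: each brokerage's listings, held in its own database.
broker_dbs = {
    "Alpha Realty": [{"id": "A1", "status": "Active", "price": 350_000}],
    "Beta Homes": [
        {"id": "B1", "status": "Active", "price": 425_000},
        {"id": "B2", "status": "Closed", "price": 300_000},
    ],
    "Gamma Group": [{"id": "G1", "status": "Active", "price": 515_000}],
}

# Hypothetical MLS policy: which uses each brokerage has consented to.
consents = {
    "Alpha Realty": {"idx", "stats"},
    "Beta Homes": {"idx", "stats"},
    "Gamma Group": {"stats"},  # opted out of IDX display
}


def bridge(use: str, status: Optional[str] = None) -> list[dict]:
    """Assemble an MLS-wide view for one use, honoring each brokerage's consent."""
    out = []
    for brokerage, listings in broker_dbs.items():
        if use not in consents.get(brokerage, set()):
            continue  # this brokerage has not consented to this use
        for listing in listings:
            if status is None or listing["status"] == status:
                out.append({**listing, "brokerage": brokerage})
    return out


idx_ids = [listing["id"] for listing in bridge("idx", status="Active")]
print(idx_ids)  # ['A1', 'B1']
stats_count = len(bridge("stats"))
print(stats_count)  # 4
```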

I’ll go into some detail on the technology concepts in the next post, but for this one, let’s focus on the business goals and benefits. The point is to leverage the existing structure of the industry to deliver on the benefits and the promise of Upstream.

Single Point of Entry

Any “front-end of choice” type of system can deliver a “single point of entry,” but this system goes way beyond that.

The issue that brokerages want to fix exists mostly for larger companies that span multiple MLSs. The current concept is to create a database that sits “upstream from” the MLS database. By using a “single point of entry” into the Upstream Database, and then sending listing data from the Upstream Database into an MLS Database, these large brokerages want to spare agents in different offices from having to learn different MLS software systems.

That is a big step forward, of course, and one that I wholeheartedly support. But we can go further.

My current concept eliminates both the database that sits upstream from the MLS and the MLS database itself. Instead, brokerages enter data into their own databases, and then grant access to those MLSs that need it.

A large brokerage, such as HomeServices of America, would have a single HSA Database. If its offices belong to 400 different MLSs, it simply grants access to the appropriate MLS for the appropriate offices involved.
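For a multi-MLS brokerage, the grant can be scoped by office. A minimal sketch, assuming each listing in the single brokerage database records its office and each MLS is granted a set of offices; the MLS IDs and office names are hypothetical.

```python
# Hypothetical single brokerage database; each listing records its office.
listings = [
    {"id": "H1", "office": "office-1"},
    {"id": "H2", "office": "office-1"},
    {"id": "H3", "office": "office-2"},
    {"id": "H4", "office": "office-3"},
]

# Hypothetical office-scoped grants: which offices' listings each MLS may see.
office_grants = {
    "mls-a": {"office-1"},
    "mls-b": {"office-2", "office-3"},
}


def view_for(mls_id: str) -> list[str]:
    """Listing IDs a given MLS can access; an MLS with no grant sees nothing."""
    allowed = office_grants.get(mls_id, set())
    return [listing["id"] for listing in listings if listing["office"] in allowed]


print(view_for("mls-a"))  # ['H1', 'H2']
print(view_for("mls-b"))  # ['H3', 'H4']
print(view_for("mls-z"))  # []
```

One database, many scoped views: adding an office to a new MLS is a change to the grant table, not a new data feed.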

The other part of the single point of entry is that brokerages often have to share their listing information with other companies — especially franchise companies. Or maybe they want to send listing information to Zillow, or to a virtual tour company, or a direct mail marketing company. Today, that means entering the same damn listing seventeen times, or getting permission from the MLS and a data feed from the MLS to send to the franchise or to the marketing company. Ewww.

Under my proposed system, the brokerage simply gives access to whoever it wants. Are you a KW franchisee? Grant KWRI access to your Database; done. Do you need to have some flyers made? Grant the printing company access to your Database; done. Do you want to put your listings on Zillow? Grant Zillow access to your Database, or tell the MLS that Zillow is on your Approved List.
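The two ways of sharing described here, a direct grant or the MLS-held Approved List, can be sketched as two paths through one access check. `BrokerageAccess`, the party names, and the assumption that the Approved List only works while the brokerage still grants the MLS access are all illustrative, not part of any existing system.

```python
from dataclasses import dataclass, field


@dataclass
class BrokerageAccess:
    """Hypothetical access rules owned by a single brokerage."""
    direct: set[str] = field(default_factory=set)         # parties granted directly
    approved_list: set[str] = field(default_factory=set)  # kept on file at the MLS
    mls_has_access: bool = True

    def can_read(self, party: str) -> bool:
        # Path 1: the brokerage granted this party access itself.
        if party in self.direct:
            return True
        # Path 2: the party is on the brokerage's Approved List held by the MLS;
        # assumed to work only while the brokerage still admits the MLS.
        return self.mls_has_access and party in self.approved_list


me = BrokerageAccess(direct={"KWRI", "flyer-printer"}, approved_list={"Zillow"})
print(me.can_read("KWRI"))          # True  (direct grant)
print(me.can_read("Zillow"))        # True  (via the MLS Approved List)
print(me.can_read("some-website"))  # False
me.mls_has_access = False
print(me.can_read("Zillow"))        # False (MLS path switched off)
```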

Again, instead of sending data hither and yon, you keep the data in your database, and give various vendors, websites, apps, and whoever else permission to access your data.

Data Management (i.e., Replication, Duplication, and Assorted Maladies)

That structure — all data is entered into and kept in the Brokerage Database and the information inside the Brokerage Database becomes the “Master Record” — eliminates almost all of the data management issues.

There is no replication, because the brokerage is no longer sending copies of its listings to anybody, including the MLS. Any edits to the listing data are made within the Brokerage Database itself, to the Master Record, and those changes are immediately communicated to all software and to all vendors using that listing information. If the edits happen at the vendor level, it is still the Master Record inside the Brokerage Database that is being changed.
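A minimal sketch of the Master Record idea, assuming a single in-memory brokerage database that hands out read and write scopes. The class, the scope names, and the party IDs are hypothetical. What it shows: an edit made through a vendor (here, the MLS) lands on the Master Record, so every other reader sees it on its next read.

```python
class BrokerageDatabase:
    """Hypothetical store holding the one Master Record per listing."""

    def __init__(self) -> None:
        self.master: dict[str, dict] = {}     # listing ID -> Master Record
        self.scopes: dict[str, set[str]] = {} # party -> {"read"} or {"read", "write"}

    def grant(self, party: str, *scopes: str) -> None:
        self.scopes.setdefault(party, set()).update(scopes)

    def _check(self, party: str, scope: str) -> None:
        if scope not in self.scopes.get(party, set()):
            raise PermissionError(f"{party} lacks {scope!r} access")

    def read(self, party: str, listing_id: str) -> dict:
        self._check(party, "read")
        return dict(self.master[listing_id])  # a snapshot for display only

    def edit(self, party: str, listing_id: str, **changes) -> None:
        # A vendor-side edit is applied to the Master Record itself.
        self._check(party, "write")
        self.master[listing_id].update(changes)


db = BrokerageDatabase()
db.master["L1"] = {"address": "123 Main St.", "photos": 12}
db.grant("mls", "read", "write")
db.grant("zillow", "read")

db.edit("mls", "L1", photos=20)            # agent edits "in the MLS"
print(db.read("zillow", "L1")["photos"])  # 20
```

Separating read and write scopes is one way a brokerage could let its MLS front end edit listings while portals can only display them.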

For example, one of the challenges today with syndication to portals is that the portals often get multiple entries for the same listing from different sources. Take Zillow for example. It might have a direct syndication deal with a brokerage, but also have a deal with the MLS. And the Agent might go into Zillow directly and update the listing record with new photos or something. Zillow has to decide which of those sources is the “correct” authoritative one. That creates replication and data integrity problems. Not under this model: the Master Record is always the correct and authoritative source. There is no other (or should be no other) listing record anywhere else.

Another common issue is timing. A buyer has put in a serious offer, and it was accepted. The listing agent changes the status of the listing from Active to Pending in the MLS. However, the MLS system refreshes its feeds every four hours. In the meantime, the brokerage has sent its feed to Zillow. Now you have the MLS record showing the property as Pending, while Zillow or IDX websites show it as Active. Problems ensue.

Under the “customer-centric” model, none of that happens. If an agent “goes into” the MLS and updates the status, she’s actually updating the Master Listing Record inside the Brokerage Database. That same record is being accessed by Zillow, by the MLS, by IDX companies, by everybody. Replication issues, gone. Data management, simplified.
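The timing problem can be simulated with an explicit clock. This sketch assumes a hypothetical `CachedFeed` that re-copies its source every four hours, compared against a direct read of the Master Record; the hour values are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CachedFeed:
    """Hypothetical feed that re-copies its source every `interval_h` hours."""
    source: dict
    interval_h: float
    snapshot: Optional[dict] = None
    last_refresh_h: Optional[float] = None

    def get(self, listing_id: str, now_h: float) -> str:
        due = self.last_refresh_h is None or now_h - self.last_refresh_h >= self.interval_h
        if due:
            self.snapshot = dict(self.source)  # copy the source at refresh time
            self.last_refresh_h = now_h
        return self.snapshot[listing_id]


master = {"L1": "Active"}  # Master Record status in the Brokerage Database
feed = CachedFeed(master, interval_h=4)

feed.get("L1", now_h=0)         # feed copies "Active" at hour 0
master["L1"] = "Pending"        # offer accepted at hour 1
print(feed.get("L1", now_h=1))  # Active  (feed is stale until hour 4)
print(master["L1"])             # Pending (direct read of the Master Record)
print(feed.get("L1", now_h=4))  # Pending (next refresh)
```

Everyone reading the Master Record directly sees “Pending” at hour 1; only copies on a refresh schedule lag behind.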

Broker Control

And in case it isn’t perfectly clear by now, when the broker data lives inside the Brokerage Database, the broker has total, 100% absolute control over its listings. Remember, the broker’s listings don’t actually “go” anywhere. They aren’t “fed” to anybody. Instead, vendors are granted access to the listings.

Has your local MLS set up a direct syndication feed to “HomesandHotChicks.com” against your wishes? No problem; just revoke any permission to “HomesandHotChicks.com,” or even to the MLS that did such a stupid thing. No more listings “fed” to that website.

A number of brokerages want to create AVMs using each other’s listing information, but the MLS won’t let them? No problem. Everybody has access to the same data APIs that the MLS itself has; just grant each other permission and voila! Broker AVM has access to the data.
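As an illustration of brokerages pooling data among themselves, here is a deliberately toy “AVM” (median price per square foot times the subject’s size) that only counts sales from brokerages that granted it access. All names and figures are hypothetical, and a real AVM would be far more sophisticated.

```python
from statistics import median

# Hypothetical closed sales, each list held in a different brokerage database.
sales = {
    "Alpha": [{"price": 300_000, "sqft": 1_500}, {"price": 420_000, "sqft": 2_000}],
    "Beta": [{"price": 360_000, "sqft": 1_800}],
    "Gamma": [{"price": 900_000, "sqft": 2_000}],
}
# Which brokerages have granted the shared AVM access (Gamma has not).
grants = {"Alpha": {"broker-avm"}, "Beta": {"broker-avm"}, "Gamma": set()}


def toy_avm(subject_sqft: int, app: str = "broker-avm") -> int:
    """Median price per square foot across permitted sales, times subject size."""
    per_sqft = [
        sale["price"] / sale["sqft"]
        for brokerage, rows in sales.items()
        if app in grants.get(brokerage, set())
        for sale in rows
    ]
    return round(median(per_sqft) * subject_sqft)


print(toy_avm(1_700))  # 340000 (Gamma's sale is never read)
```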

Short of a government mandate to send listings somewhere, the broker has complete control over its listing data, and no vendor, no website, no organization anywhere can do anything about it. The broker controls the data, because it owns that data, and because it owns/controls the Brokerage Database within which that data resides.

By the way, remember that Upstream was supposed to be the “nuclear option” that can be deployed against an obstreperous MLS? That if an MLS is just… doing all kinds of crap that pisses off the brokerages, Upstream can cut them off and move the listings to another MLS? That remains in place with this system. The brokerage controls its listings, period, end of story. If one or more MLSs are implementing policies that piss off the brokerage, it would be even easier to revoke the access rights of one MLS and turn on access rights of a different MLS.

Consumer Access to Listing Information and the Public Utility Problem

As you will recall, in Part 1, I began by worrying that the way Upstream is set up today opens the door wide to its being classified as a public utility. That danger is much, much less under this system. (I can’t say it’s eliminated, because the government can do whatever the hell it wants to do at the end of the day….)

First, this “broker control over data” is happening inside the MLS itself. The MLS has already been through the trial by fire in 2006 and Congress chose not to classify the MLS as a public utility. Its core functionality — enabling cooperation and compensation amongst brokerages — has not changed, even if the “MLS database” has become a “meta-database” of permissions to thousands of brokerage databases. The argument that prevailed in 2006 should prevail in 2015.

In a very real way, this sort of “Upstream Within the MLS” is not much different from the way that the MLS has used ListHub as the syndication vehicle for years and years. This API-based platform is just an evolution of that.

Second, there is far less of a specter of “coordinated action.” Suppose that Zillow does something that really pisses off 90% of the brokerages. Under the currently proposed Upstream model, brokers would start disabling data feeds to Zillow from within a single “national database” whose gatekeeper is UpstreamRE, LLC and which is operated by NAR’s wholly-owned subsidiary. That smacks of antitrust, of coordinated action, of collusion, and so on and so forth. The DoJ and the FTC would be on that like white on rice.

But under the distributed Brokerage Database system, there is no concerted action. There is no coordination. Each of the thousands of brokerages revokes access to Zillow independently. As long as there is no meeting at NAR Midyear with brokers getting up and making long speeches about how “we” have to turn off access to Zillow, there’s far less concern about anticompetitive actions.

Would the MLS Do This?

Of course, the $64,000 Question is whether the MLS would go along with this scheme. Would they really “let go” of the so-called control that having brokers and agents upload their listings directly into the MLS Database gives the MLS?

I think the answer is a qualified Yes.

The MLS CEOs I know best (people like Art Carter at CRMLS, David Charron at MRIS, and Merri Jo Cowen at MFRMLS, all clients past and present) believe in their hearts that the MLS exists to serve the brokers. They recognize clearly that the MLS can’t act with arrogance, as if it can dictate terms to the brokerages. I think those MLSs would absolutely enable as much of the Upstream functionality as they technologically can.

Some MLSs may prove hesitant, recalcitrant, or (that wonderful WAV Group word) obstreperous. But those MLSs’ days are numbered. Whether it’s through this modular MLS approach or through the current Upstream model of a single national database, those MLSs will find themselves increasingly cut off from, and alienated from, the brokerages without which they cannot exist.

So one way or the other, with enthusiasm and support or grudgingly because they have no choice, the MLS will go along with this system if that is what the brokerages demand.

Conclusion, and Part 3

I had hoped to get to how this modular MLS system can be done today. Because this isn’t science fiction. It’s not fanciful hoping. The technology to do this exists today. But I’m at almost 3,500 words. I guess I’ll tackle that in Part 3.

But the high-level strategic advice remains the same: do Upstream from within the MLS itself. The MLS can deliver all of the benefits that brokerages want from Upstream, including the “nuclear option,” with minimal downside risk from .GOV because it’s been through the wringer already.

This is the way to support the goals of Project Upstream. It may not be the only way, but I’m convinced that it’s the best way.

-rsh

6 thoughts on “On Project Upstream (Part 2): The Path Forward”

Rob – As always love your comments. A few thoughts as I have tried to sift through all this in the past few months.

1) Where does ‘Sold’ data reside in either system? Currently, when a record is created in an MLS, it seems clear that the record remains the property of the MLS, and thus the Sold data is available to the Participants of that MLS. Thus a valuable long-term asset is created. In the new models, who ‘owns’ or even holds that Sold data? It seems more problematic in either model.

2) How is governance achieved in either model if the MLS is tasked with ‘enforcement’ of the rules but has no authority to alter a record and no tool for compliance? If all data is entered at an Upstream site and a problem exists (e.g., phone numbers or a website in remarks, bad photos, or no compensation listed at all), how does an MLS or any governance entity enforce its rules when the entry is now done Upstream, with the MLS effectively just pulling a feed?

I agree we need to look seriously at a new model, and it should serve the Brokers. But if the end goal is just to get the data to the portals, there is little or no real role for an MLS. If the goal is to leave the MLS in the governance and compensation position as a BTB service, then how does any Upstream application allow the MLS the operational ability it likely needs to serve that role?

Great questions, Scott. My first observation is that unless somehow I’m elected as the Czar of All Things MLS, each local MLS will need to make some of those decisions. (Of course, as a consultant, I’d be happy to help, *grin*.) But my personal thoughts on these…

1. “Sold” data is a tricky area. But my take on it is that the broker owns the sold data, just the same as it owns the listing. Any other approach is, I think, provocative and reverses the relationship between the MLS and the brokerage. We can’t forget that the MLS is fundamentally a cooperative of brokerages; the brokerage is not the data-gathering contractor for the MLS.

In either model, sold data will be an issue that the MLS will have to address. But under my model, it is the local brokerages that make up the local MLS that make that decision, with no involvement by a national entity. It might not make a difference, but I think it does in the minds of federal antitrust folks and government regulators.

2. I actually don’t see an issue with enforcement. The MLS is fundamentally a rules & enforcement entity. There is no requirement that the MLS be able to alter a record; it simply needs to (a) identify that a problem exists, then (b) go through its enforcement mechanisms to ensure that the Participant makes the appropriate changes.

Brokerages join the MLS in order to have rules and to have those rules enforced. If they’re not buying into that, then yeah, the MLS is going to have to kick that brokerage out. The whole point of the MLS is a group of brokerages agreeing with each other to various rules and regulations, and agreeing with each other that they will abide by them. It’s like the 32 teams of the NFL coming together with rules and agreeing to abide by those rules. The MLS, then, is kind of like the referee.
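Enforcement step (a), identifying that a problem exists without touching the record, might look something like this minimal sketch. The rules (no phone numbers or web addresses in remarks, compensation must be present), the field names, and the regular expressions are assumptions for illustration; each MLS would encode its own rules.

```python
import re

# Hypothetical MLS rules: no phone numbers or web addresses in public remarks,
# and every listing must state an offer of compensation.
PHONE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b")
WEB = re.compile(r"\b(?:https?://|www\.)\S+", re.IGNORECASE)


def find_violations(listing: dict) -> list[str]:
    """Report rule problems; the MLS reads the record but never alters it."""
    remarks = listing.get("remarks", "")
    problems = []
    if PHONE.search(remarks):
        problems.append("phone number in remarks")
    if WEB.search(remarks):
        problems.append("web address in remarks")
    if not listing.get("compensation"):
        problems.append("no compensation offered")
    return problems


bad = {"remarks": "Sunny 3 bed! Call 555-555-0199 or see www.example.com"}
good = {"remarks": "Sunny 3 bed, 2 bath, 1,850 sqft.", "compensation": "2.5%"}
print(find_violations(bad))
# ['phone number in remarks', 'web address in remarks', 'no compensation offered']
print(find_violations(good))  # []
```

Step (b) stays exactly where it is today: the flagged items go into the MLS’s normal notice-and-fine process, and the Participant makes the correction in its own Brokerage Database.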

Your larger question is the most interesting one: “If the goal is to leave the MLS in the governance and compensation position as a BTB service, then how does any Upstream application allow the MLS the operational ability it likely needs to serve that role.”

I’ve written and spoken about this in the past, but I’ll summarize it like this. The MLS’s role has to change. The MLS may have been thought of as a “technology and data provider” in the past. No more. The MLS has to be seen, and see itself, as the Lawgiver that creates and operates a Marketplace for the benefit of the Participants. Governance, rules, enforcement, cooperation and compensation — none of these change. Data storage and data technology become secondary to the core purpose of the MLS: to help the Participant brokers create and operate an orderly Marketplace so that they can make a living.

I know that’s short; maybe we’ll need to expand on that idea a lot more in the future.

Scott Hughes … To answer your question regarding “sold” data, it’s best to separate the actual property record database from the marketplace (which would record transaction history).

AMP (property record / community database)

-> Upstream (offers to sell AMP property with syndication)

-> MLS (aggregation of AMP + Upstream data distributed to local participants [marketplace])

If I were an MLS today, I would do what Zillow just did in their dotloop acquisition. Recognize that your value proposition is not being the database, but being the marketplace. As such, I’d invest in facilitating transactions and shift from a dues-based subscription model to a transaction-based model.

Think of this. MLS total addressable market (potential revenue) in the U.S. under the member model: 1 – 1.5 million users at ~$25/mo comes to $25 – 37.5 million/mo, or $300 – 450 million/yr (gross estimate). Now, with over 5 million transactions per year in residential alone, say $5 to list and $150/side to close. Assume all 2-sided for simplicity. $305/transaction * 5 million = $1.525 billion/yr. This is what excites me.
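Spelled out with only the figures in this comment (the $25 million/mo number corresponds to the 1 million-user end of the range), the comparison works out roughly as follows:

$$
R_{\text{member}} = (1.0 \text{ to } 1.5) \times 10^{6}\ \text{users} \times \$25/\text{mo} \times 12 = \$300 \text{ to } \$450\ \text{million/yr}
$$

$$
R_{\text{txn}} = (\$5 + 2 \times \$150) \times 5 \times 10^{6} = \$305 \times 5 \times 10^{6} = \$1.525\ \text{billion/yr}
$$

$$
\frac{1525}{450} \approx 3.4, \qquad \frac{1525}{300} \approx 5.1
$$

So, under these assumptions, the transaction model is roughly 3.4 to 5 times the member model.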

Rob, sorry for the shameless plug but see two of my past blog posts. The tea leaves are clear and I hope the MLSs can read them and get together to maintain the most valuable asset, the marketplace. 😉 Perhaps some scrappy entrepreneurs can help them do that.

– http://blog.goomzee.com/2015/05/29/the-industry-really-needs-two-upstreams/

– http://blog.goomzee.com/2013/02/21/is-there-a-future-for-brokers-and-mls-i-think-so/

I was thinking about flagging this as comment spam…

🙂 See you in Kansas City or San Diego…

Real estate broker driven inventories will go bye-bye by 2030.

a.
